Computers Yesterday and Today


By Dick Yates

The nameless Neolithic man who hacked out the first wheel may well be the world's most celebrated caveman, but for sheer creative genius he had a strong rival in one of his less publicized contemporaries: the hairy character who first discovered that by manipulating his own fingers he could "describe" all quantities between one and ten. By so doing, he not only founded the science of mathematics (whose decimal system, based on varying powers of ten, is forever linked to the fact that human beings have ten fingers), but at the same time he was operating the world's first digital computer.

After this world-shaking discovery, it was only a question of time before counters other than fingers came into use - columns of pebbles laid on the ground, pellets of bronze or ivory that slid back and forth on a grooved board, beads strung on wires within a frame. All these devices gradually evolved into the abacus - the standard calculating instrument of all the civilizations of antiquity, and still widely in use throughout the Far East.

Each of the abacus' parallel bead-strung wires represents one place in the notation system (units, tens, hundreds, and thousands) and each holds two groups of beads: one of five beads, each representing a single unit of that power; and one of two beads, each representing five units. Learning to use an abacus takes time, but surprisingly enough, an experienced operator can perform computations as fast as a man working a modern desk calculator.

Early Calculating Machines

Only after the passage of many centuries was the first major advance over the abacus made. In 1642, the French philosopher and scientist Blaise Pascal - then only 19 years old - invented the first true adding machine; Pascal's calculator was the first in a long and illustrious line of mechanical calculating devices.

Twenty years later, in England, Sir Samuel Morland developed a more compact calculator that could multiply (by cumulative addition) as well as add and subtract. And in 1682, the German Wilhelm Leibnitz perfected a machine that could perform all four basic arithmetical functions as well as the extraction of square roots. Leibnitz' principles are still employed in modern calculating machines, the only major difference being the introduction of electric power to speed up the movement of the mechanical parts.
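Multiplication "by cumulative addition" is easy to picture in modern terms. The following Python sketch is only an illustration of the arithmetic idea, not a model of Morland's mechanism, whose internals the article does not describe; the function name `multiply_by_cumulative_addition` is hypothetical and was chosen for this example.

```python
def multiply_by_cumulative_addition(multiplicand: int, multiplier: int) -> int:
    """Multiply two non-negative integers using nothing but repeated addition."""
    if multiplicand < 0 or multiplier < 0:
        raise ValueError("this sketch handles non-negative integers only")
    total = 0
    for _ in range(multiplier):
        # One addition step: accumulate the multiplicand once more.
        total += multiplicand
    return total


if __name__ == "__main__":
    # 27 x 4 is computed as 27 + 27 + 27 + 27.
    print(multiply_by_cumulative_addition(27, 4))
```

The point of the sketch is that a machine able only to add can still multiply, provided it is willing to repeat the addition as many times as the multiplier demands.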

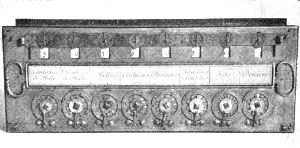

Pascal's calculator of 1642. The device was "programed" by turning the bottom wheels. Each revolution of a wheel caused the adjoining wheel on the left to advance one notch, or digit. The numbers behind the little windows provided "read-out." The six wheels on the left operated on the decimal system and could handle numbers up to 999,999. The two divisions at far right were for use in adding sous and deniers, French money of the time.

Another great name in the development of automatic computation is that of Charles Babbage, a mathematics professor at England's Cambridge University, who in 1812 designed what he called a "difference engine" for mechanically performing advanced mathematical calculations "without mental intervention." Neither that machine nor a later Babbage invention, the "analytical engine," proved practical for general manufacture because of the technological limitations of the period, but Babbage's designs remain valid today. The logical organization of many modern electronic computers bears a remarkable similarity to that of his "engines."

The next important development was the mechanical tabulator capable of simultaneously registering horizontal and vertical sums and of processing large amounts of data rapidly in sequence. The first of these machines, designed as an aid to statistical analysis, was invented in 1872 by Charles Seaton, then chief clerk of the United States Bureau of the Census. This was followed in 1887 by the work of Dr. Herman Hollerith, also a Census Bureau official, who adapted a punched-paper control system to statistical work. His punched-card methods, together with those developed in 1890 by another American, James Powers, laid the groundwork for the now-familiar punched-card tabulating systems.

Electronic Computers

As early as 1919, electronics came tentatively onto the scene, when an article by W. H. Eccles and F. W. Jordan, published in the first issue of Radio Review, described an electronic "trigger circuit" that could be used for automatic counting. But the Eccles-Jordan circuit, like the Babbage difference engine, was ahead of its time.

Then came World War II. Under the pressure of military needs for ballistics data on newly developed weapons, the new science of electronic data processing came into its own. The intensive effort of those years produced two basic types of electronic computers - analog and digital - and the distinction is an important one to bear in mind.

Analog systems differ from digital ones in that they use varying physical and electrical magnitudes (voltages, light intensities, shaft positions and the like) as factors analogous to mathematical values, rather than pulses representing actual numbers. Just as the abacus is a simple digital computer, the slide rule (on which mathematical values are expressed in terms of linear relationships) is an analog device. So is the automobile speedometer, whose mechanism does not actually count the revolutions of the wheel and repeatedly divide to determine the number of miles per hour, but rather senses the rate of revolution and interprets that rate in terms of a reading on a miles-per-hour dial.

Most of the wartime needs were for analog computers, many of which were successfully built under government contract at a number of American universities. In certain cases, however, machines were required which would provide answers to ballistics equations faster and with greater precision than analog systems were capable of doing. It was the attempt to fulfill these specifications that gave rise to the development of digital computing systems.

In 1944, at Harvard University, Dr. H. H. Aiken completed a semi-electronic system called the Automatic Sequence Controlled Calculator, known also as the Harvard Mark I, for the Navy's Bureau of Ordnance. And in the next few years Dr. Aiken built three improved models, known as the Mark II, Mark III and Mark IV.
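The speedometer comparison made earlier in this section can be put in concrete terms. The Python sketch below is a rough illustration under stated assumptions, not a description of any real instrument: the function names, the idea of a sensor voltage proportional to speed, and all the numbers are hypothetical and chosen only to contrast the two approaches.

```python
FEET_PER_MILE = 5280.0
SECONDS_PER_HOUR = 3600.0


def digital_speed_mph(revolutions: int, seconds: float,
                      wheel_circumference_ft: float) -> float:
    """Digital approach: count discrete wheel revolutions, then divide."""
    miles = revolutions * wheel_circumference_ft / FEET_PER_MILE
    hours = seconds / SECONDS_PER_HOUR
    return miles / hours


def analog_speed_mph(sensor_volts: float, mph_per_volt: float) -> float:
    """Analog approach: a continuous magnitude (here an assumed voltage)
    stands in for the speed and is simply scaled onto the dial."""
    return sensor_volts * mph_per_volt


if __name__ == "__main__":
    # Hypothetical figures: 88 revolutions of a 6-foot wheel in 6 seconds.
    print(digital_speed_mph(88, 6.0, 6.0))
    # Hypothetical sensor: 3 volts on a scale of 20 miles per hour per volt.
    print(analog_speed_mph(3.0, 20.0))
```

Both functions are meant to describe the same speed of about 60 miles per hour. The digital version arrives at it by counting and arithmetic, while the analog version never counts anything: it reads one physical quantity and treats it as proportional to the answer.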

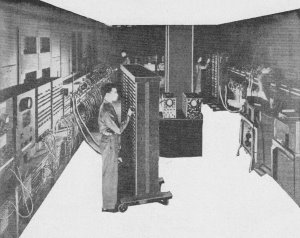

ENIAC, the first "all-electronic" digital computer, was installed in 1945 at the University of Pennsylvania.

The modern trend in computer design is typified by Remington Rand's latest Univac model. Use of transistors makes the entire system extremely compact and even more reliable than tube-operated computers.

The ENIAC

Meanwhile, a second major contribution was progressing at the University of Pennsylvania's Moore School of Engineering. Early in 1943, an associate professor of electronics named Dr. J. W. Mauchly gave the Army Ordnance department the design for a general-purpose, all-electronic digital computer called the ENIAC, which was ultimately completed in 1945.

The first problem assigned to the ENIAC was a calculation in nuclear physics which would have taken 100 man-years to solve by conventional methods. The ENIAC came up with the answer in two weeks, of which only two hours were spent in actual computation, the remainder being devoted to operational details and reviews of the results.

ENIAC represented the first major break with the past in that it was entirely electronic except for its means of "input" and "output" (the process of feeding data into the machine and of delivering the results); unlike the Mark I, however, it was not automatically sequenced. Modern computers can thus be said to have evolved from a wedding of the techniques employed in ENIAC and Mark I.

Other pioneer work during the war and immediate postwar years included projects at such organizations as: Princeton's Institute for Advanced Study, where outstanding developments were made by the late Dr. John von Neumann; Bell Laboratories; M.I.T.; and the National Bureau of Standards.

Enter Univac

After the war, Dr. Mauchly joined in partnership with Prof. J. Presper Eckert, who had been chief engineer of the ENIAC project, and the two men formed a company in Philadelphia to develop new computers and promote their use in commercial applications. The Eckert-Mauchly firm, which later became a subsidiary of Remington Rand Inc. (now, in turn, a division of Sperry Rand Corporation), was responsible for the development of the Univac in 1950.

Generally regarded as the most successful electronic data processor in the world today, and certainly the most famous, the large-scale Univac system was the first to handle both numerical and alphabetical information equally well. It was also the first to divorce the complex input and output problems from the actual computation operation. Particularly important was another major innovation from an earlier Eckert-Mauchly model: the Univac was wholly self-checking. It checked its own accuracy in each step of each computation and thus eliminated the need for running problems through a second time for verification.

With the Univac, electronic data processing came of age. The many post-Univac computers produced in the past few years have further opened a new era in man's ability to organize and make use of factual information. Electronic computation has already brought about substantial changes in patterns of living, and scarcely a week goes by without someone's finding a new use for computers, a new way in which electronic data automation can be applied to eliminate the drudgery of making complex calculations "by hand." Meanwhile, rapid strides are being made in the further refinement and development of computers themselves, particularly in the miniaturization and improvement of their components through the use of smaller and more reliable transistors, resistors, diodes, etc.

Proper Perspective

In the first tumult of publicity about computers during the early fifties (particularly when the Univac won national prominence for successfully predicting the outcome of the 1952 Presidential election), the misleading term "giant brain" caused a good deal of confusion - and some uneasiness - with its implication that science had given birth to a thinking device superior to the human mind.

Nowadays, most people know better. They know that, by human standards, the "giant brain" is a talented "idiot," that it is wholly dependent on instructions and thus can't really think at all - that it is, in other words, only a machine. This simmering down of the public's "gee-whiz" attitude toward computers is a healthy sign, for no tool can ever be truly useful if it inspires awe in its users instead of trust.

To the same end, it's a good idea to think of the computer in its historical perspective - not as an overnight phenomenon, but as the fruit of a practical science with its roots far in the past. Pascal, Leibnitz, Babbage and the others, if they were alive today, would probably not be astonished by the "miracle" of electronic data processing. More likely, they would simply be pleased to find that their pioneer work had been brought to fulfillment.

Posted November 27, 2020


Far from bursting on the scene fully developed, computers have been in a state of constant evolution for over 300 years.
